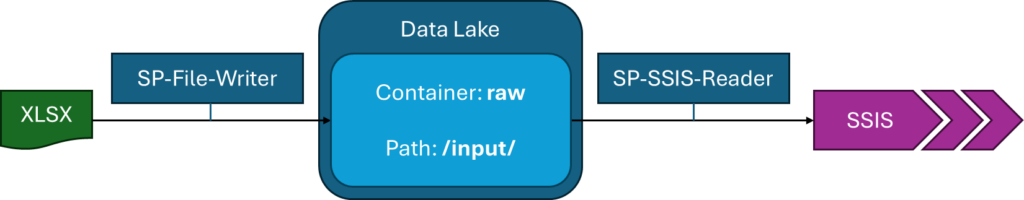

While new data modern data warehouse projects are often started with cloud based ETL products like Azure Data Factory, Synapse or Fabric pipelines, many companies still have a large investment in dataflows designed in SQLServer Integration Services. However, the datasources from which SSIS has to read are move increasingly to REST APIs or data lakes, services that were not around in the starting days of SSIS. With the split between storage and compute, the choice for a datalake for data storage is usually easily made.

This article will focus on how we integrate SSIS with datalake storage by connecting to datalakes, so we can still develop in the existing technology stack, but be open for future migrations to more modern platforms like Fabric.

In this use case we intend to read data from an Azure Data Lake Storage account Gen 2 with SSIS. To set this up properly from a security point of view, we’ll not use an Account Key (which is equivalent to the “admin” password for your datalake), but a person independant access using Service Principals.

The next sections detail the configuration of each of these 3 components:

- Service Principals

- Data Lake

- SSIS

Service Principal

The configuration of the Service Principal or App Registration is very standard, so I’ll summarize the essential steps:

- In Azure portal, EntraID, App Registration click “+ New registration”

- The accounts supported you can leave default to “Accounts in this organizational directory only”

- Note down the Application ID and Tenant ID

- In Certificate & Secrets, create a secret

- Note down the secret value

For this tutorial, we’ll create 2 service principals:

- SP-File-Writer : this security context will be used by the team/application/… that will provide files to your datalake. It will have specific restrictions on where in the datalake it can write files

- SP-SSIS-Reader : this security context will be used when connecting from SSIS to the datalake to read the files. It can be used by any of the SSIS processes.

Datalake configuration

Find below the names I’ll use throughout the setup

- Azure Data Lake Storage Gen2 = axisdl

- Container = raw

- Path = input

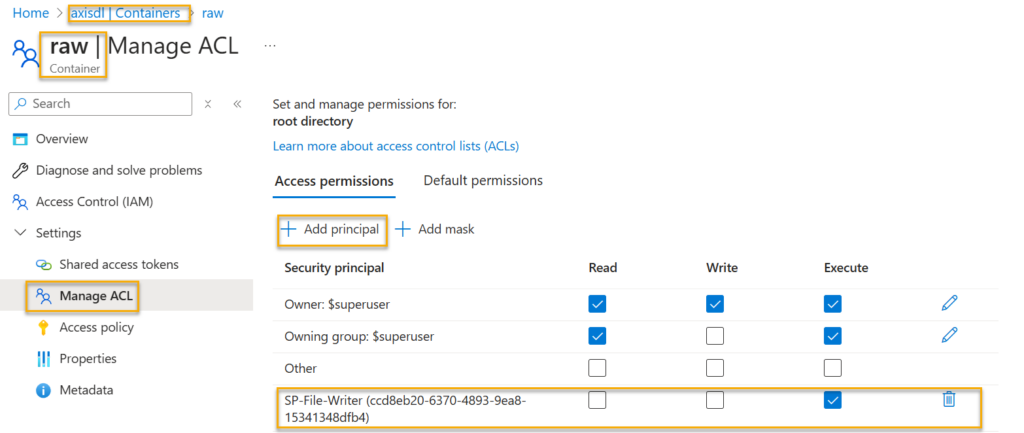

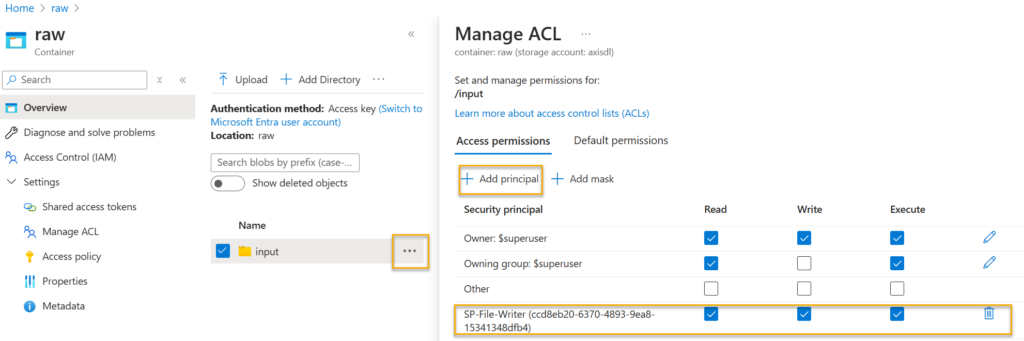

Setup SP-File-Writer permissions

We want to provide read + write access to a folder in the datalake for SP-File-Writer.

- Go to the container and expand settings, Manage ACL

- Click Add principal and when added, check the execute permission. The container raw (and every directory on the path to the final folder) needs to have an execute ACL assignment

T

- The folder input needs to have read/write/execute ACL assignment. Find the menu “Manage ACL” in the … behind the folder name

No RBAC roles needs to be assigned for this Service Principal, as these will bypass ACLs and potentially give much broader access.

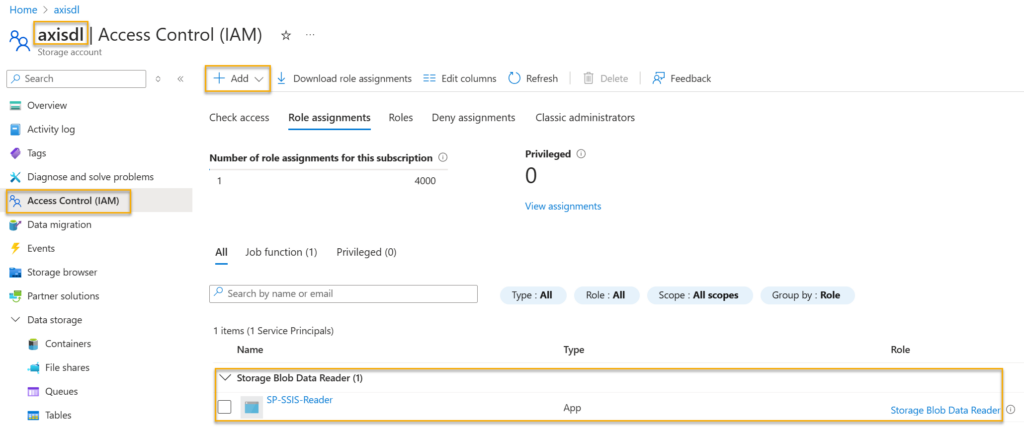

Setup SP-SSIS-Reader permissions

We want to provide full read access on the datalake to SP-SSIS-Reader.

The access for SP-SSIS-Reader (used by SSIS to download files) will be handled by RBAC role Storage Blob Data Reader. If you also need to delete or move files, use the Storage Blob Data Contributor role instead.

Assign the role in Access Control (AIM) at the level of the storage account.

- Click Add+ and select add role assignment.

- Select the role Storage Blob Data Reader and click Next

- Select the SP-SSIS-Reader in the members.

- Review and complete.

General configuration settings

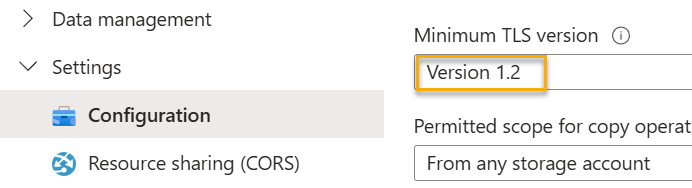

Make sure that in Settings / Configuration the Minimum TLS version is set to 1.2, as older versions will no longer be supported after July 2025.

SSIS Configuration

Install Azure Feature pack for SQLServer 2019

Download the Azure Feature Pack for Integration Services from the Microsoft Download Center. Make sure you select the right version for the version of your Visual Studio, e.g. 2019

The installer will complain about not finding a 64 bit version of SSIS. This is ok, as Visual Studio is just 32 bit execution runtime of SSIS. When running on the application server, you’ll install these components there as well, and both runtimes will be installed.

Note: If you start Visual Studio and you don’t see the new components installed, don’t panic. Make sure to sure set the « TargetVersion » of your project to the same as the feature pack you installed, e.g. SQLServer 2019. By default a new project for me was created with Version 2022, which was not showing the newly installed components.

Prepare host environment

Enable TLS 1.2 support

Make sure you have TLS 1.2 support enabled in the registry by adding following keys:

In HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft.NETFramework\v4.0.30319

SchUseStrongCrypto REG_DWORD 1

SystemDefaultTlsVersions REG_DWORD 1

In HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Microsoft.NETFramework\v4.0.30319

SchUseStrongCrypto REG_DWORD 1

SystemDefaultTlsVersions REG_DWORD 1

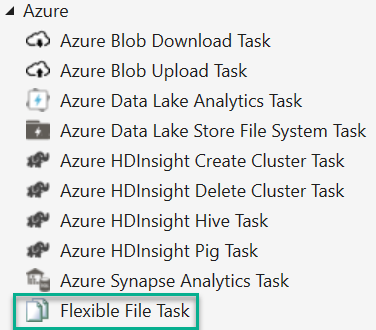

Flexible File Task setup

There are many components available in the feature pack, which have similar configuration and features. This example focusses on downloading files from a datalake, not directly consuming/writing data from CSV files, for which the other source and destination components are better suited. I can add a write-up of those as well, but the Flexible File Task posed the most problems to get working correctly that all pitfalls are probably covered with this one.

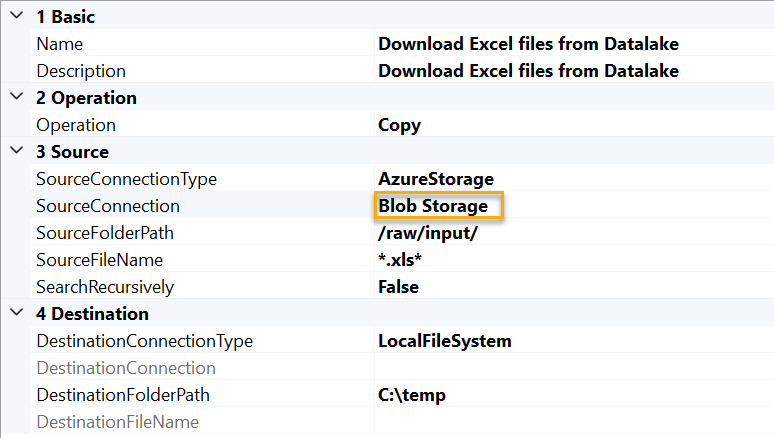

Configure the component

The configuration should be made as follows to download e.g. Excel files from a datalake using SSIS

Blob Storage is the name of the connection manager, which is of type Azure Storage Connection.

Note to provide the path in the form /container/directory/

The FileName allows for various filter types, using *.xls* will take any files with a suffix starting with xls, so covering for xlsx as well.

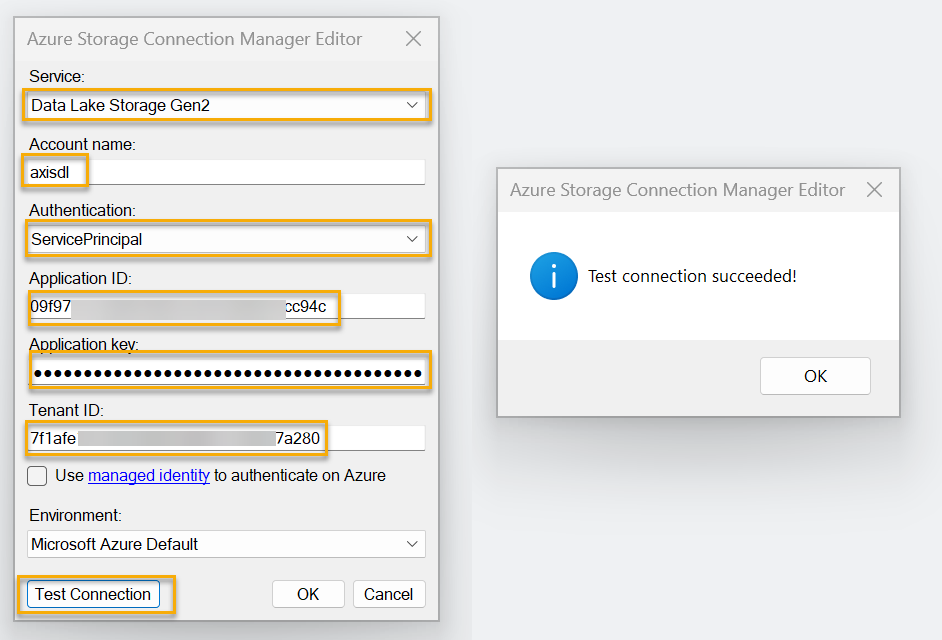

Configure Connection Manager

To configure the connection manager fill out:

- account name

- application ID = client ID of the SP-SSIS-Reader service principal

- authentication = ServicePrincipal

- application key = secret of the SP-SSIS-Reader

- tenant ID = also noted earlier from the SP-SSIS-Reader

After configuring all, you can press test connection. If the RBAC right was correctly setup (and these parameters are correct), you’ll get a succes message.

Note: Test Connection will only work when the RBAC role is assigned, but the Flexible File Task component will also work when only ACLs are used without an RBAC role.

Strictly spoken, RBAC is not necessary, since when the role is missing, the ACL assignment for the Service Principal are checked, allowing fine grained access to a folder in a container. Assigning RBAC role ignores the ACLs assignments and the account has access to all. You could as such run this component without the test connection working.

Other components

Azure Blob Download Task is meant for Azure Blob Storage endpoint. The connector seems to allow Data Lake Storage Gen2, but is then not usable in the component. (don’t use to connect to Gen2) – in fact, it warns in the title (which is easy to overlook).

Azure Data Lake Store File System Task can only connect to Data Lake Storage Gen1, also mentioned in the caption of the component

Security notes

- Never be tempted to use/share a datalake Account key in any script or automation. Only for temporary testing/debugging purposes this can be used.

- In order to set ACLs assignments, will need to have the Storage Blob Data Owner role assigned (at Storage account level). The storage account RBAC role Owner doesn’t allow you to set ACLs assignments. You first have to assign you the above role, and afterwards you can set ACLs rights.

Troubleshooting

In case you have the need to troubleshoot access problems using a basic request, you can use the below Python script, which needs a client Id, secret, container and path to test.

from azure.storage.filedatalake import DataLakeServiceClient

from azure.identity import ClientSecretCredential

tenant_id="ae549f63e-b8f6-4fe3-9f3e-2d9f63b7a"

dl_container = "raw"

dl_path = "input"

client_id="ba7019a77-e319-5554-b5d9-b8454e7019a5f8"

client_secret="xxxxx"

credential = ClientSecretCredential(tenant_id,client_id,client_secret)

service = DataLakeServiceClient(account_url="https://axisdl.dfs.core.windows.net/",credential=credential)

fsc = service.get_file_system_client(dl_container)

portalfolder = [path.name for path in fsc.get_paths(path=dl_path)]

for fp in portalfolder:

fct = fsc.get_file_client(file_path=fp)

print(fct.path_name)This will output the list of files in the path.