In Fabric Data Pipelines, the recommended way to quickly ingest data is the Copy Activity. This activity copies data from a wide range of sources to a wide range of destinations when there is no need to (heavily) transform the data in between. It lets you load your Lakehouse from external sources and, in the next step, work with the Lakehouse data using other technologies.

Problem

When your source is a JSON document, however, the Copy Activity can struggle to ingest it.

Basic JSON

A basic JSON document representing a list of records looks like this:

```json
[
  {
    "records" : [
      { "id" : "1", "company_code" : "CC", "company_description" : "Character Creator" },
      { "id" : "2", "company_code" : "MD", "company_description" : "Master Decisions" }
    ]
  }
]
```

It contains an array, which holds the records.

For this structure, you can easily define a Mapping with Import Schema, and ingestion works perfectly.

JSON with multiple arrays

However, when your JSON document contains multiple arrays, the Copy Activity produces the Cartesian product of all the arrays as output rows.

For example, the following document results in 2 × 2 = 4 records:

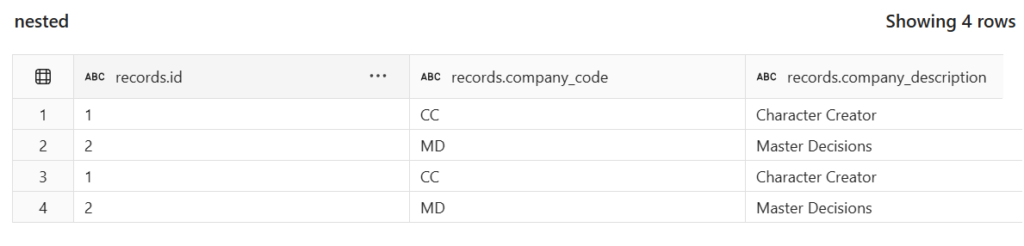

The activity creates every combination of array elements as output:

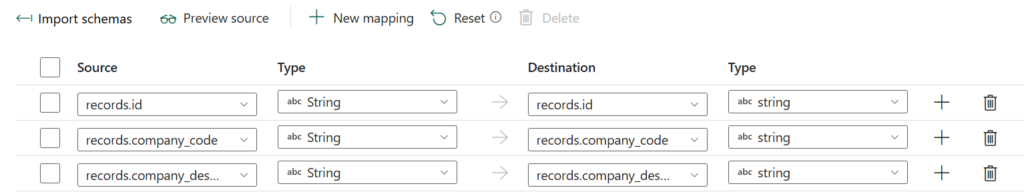

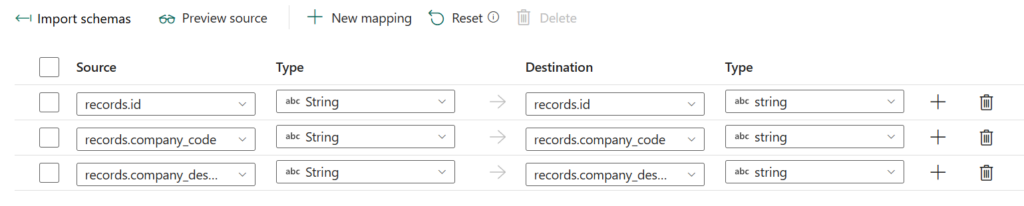

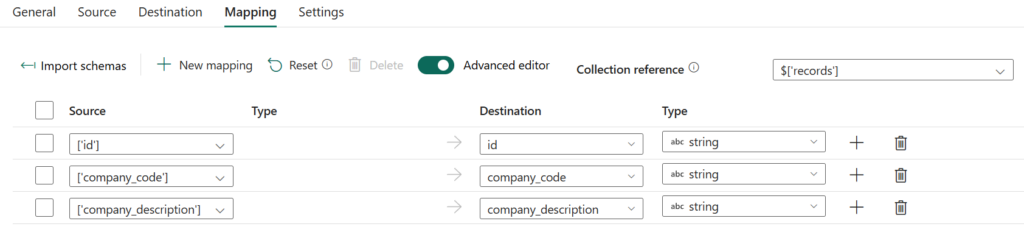
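To make the row explosion concrete, here is a minimal Python sketch that mimics what the Copy Activity appears to do with sibling arrays: it cross-joins them. The `locations` array and the `flatten_all_arrays` helper are hypothetical illustrations that reproduce the row count; they are not Fabric's actual implementation.

```python
from itertools import product

# Hypothetical, simplified document with two sibling arrays. The "locations"
# array is an illustrative assumption, not taken from the screenshots.
document = {
    "records": [
        {"id": "1", "company_code": "CC", "company_description": "Character Creator"},
        {"id": "2", "company_code": "MD", "company_description": "Master Decisions"},
    ],
    "locations": [
        {"city": "Brussels"},
        {"city": "Antwerp"},
    ],
}


def flatten_all_arrays(doc: dict) -> list[dict]:
    """Emit one row per combination of array elements (a cross join)."""
    array_keys = [key for key, value in doc.items() if isinstance(value, list)]
    rows = []
    for combo in product(*(doc[key] for key in array_keys)):
        row = {}
        for key, item in zip(array_keys, combo):
            # Prefix each field with its array name to keep columns distinct.
            for field, value in item.items():
                row[f"{key}.{field}"] = value
        rows.append(row)
    return rows


rows = flatten_all_arrays(document)
print(len(rows))  # 4
```

Each of the 2 records is paired with each of the 2 locations, which is where the 2 × 2 rows come from.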

When you then set up the mapping for just the fields you’re interested in, e.g. only the 3 fields in the records array, you will end up with duplicate rows, because the arrays you didn’t map (ignored) still multiply the number of rows.
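A small Python sketch shows why the duplicates appear: projecting a cross-joined result onto the record fields alone cannot undo the multiplication. The `locations` array is again a hypothetical second array used only for illustration.

```python
from itertools import product

# Hypothetical two-array input; "locations" is illustrative only.
records = [
    {"id": "1", "company_code": "CC", "company_description": "Character Creator"},
    {"id": "2", "company_code": "MD", "company_description": "Master Decisions"},
]
locations = [{"city": "Brussels"}, {"city": "Antwerp"}]

# The cross join yields one row per (record, location) pair: 2 x 2 = 4 rows.
cross_joined = [{**record, **location} for record, location in product(records, locations)]

# Map only the three record fields and ignore "city".
mapped_fields = ("id", "company_code", "company_description")
projected = [tuple(row[field] for field in mapped_fields) for row in cross_joined]

print(len(projected))       # 4 rows written to the destination
print(len(set(projected)))  # but only 2 distinct records
```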

This behavior is quite different from how Synapse or ADF would process the same JSON in a copy activity.

Solution

If only we could specify up front the array we are actually interested in, as was possible in Synapse with the Collection Reference field…

No destination

This field doesn’t exist in Fabric, unless you forget to specify a Destination. You can also clear your current Destination; when you go back to Mappings, the field is suddenly available.

You could enter $['records'] as the collection reference, but as soon as you specify a Destination, the field disappears again and the old mappings are back.

Under the hood

What did strike me is that the JSON definition of the pipeline contains an attribute called “typeConversion” with the value “true” in the “translator” node.

What would happen if I set this to false, to minimize any kind of conversion or interference by the activity?

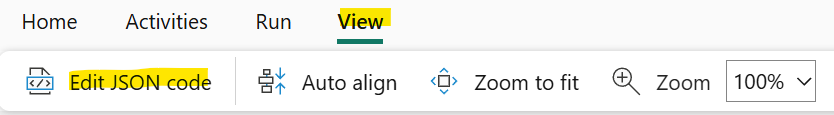

Make sure you have a Destination selected, then edit the JSON document by clicking View and “Edit JSON code”.

Change the typeConversion to false and click OK.

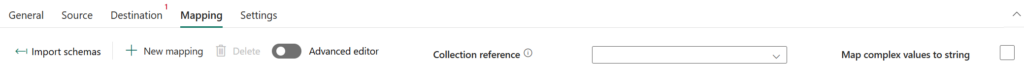

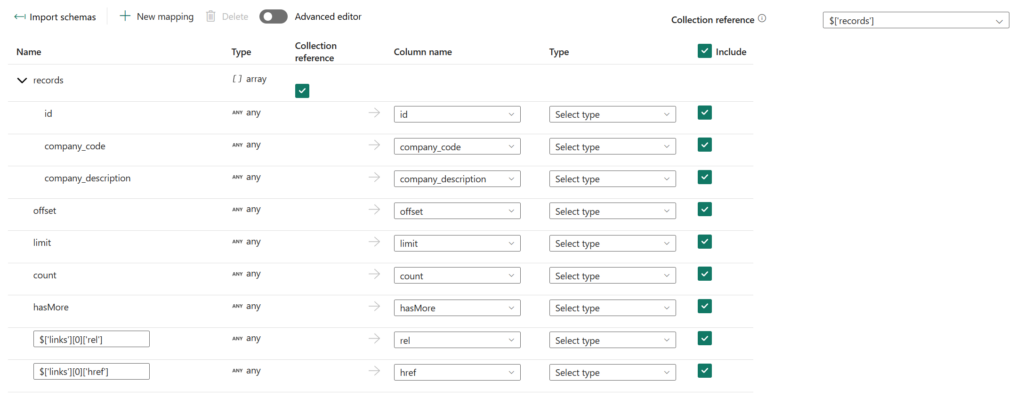
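The edit itself is a one-property change. As a sketch, assuming the pipeline JSON nests the Copy Activity's translator under `properties.activities[].typeProperties.translator` the way ADF pipelines do, the same change could be scripted as below. The trimmed pipeline definition and its names are hypothetical, and `disable_type_conversion` is an illustrative helper, not a Fabric API; in practice you would simply edit the value in the dialog.

```python
import json

# Hypothetical, heavily trimmed pipeline definition. The real JSON shown under
# "Edit JSON code" has many more properties; the activities/typeProperties/
# translator nesting is assumed to follow the ADF pipeline schema.
pipeline_json = """
{
  "name": "IngestJson",
  "properties": {
    "activities": [
      {
        "name": "Copy data1",
        "type": "Copy",
        "typeProperties": {
          "translator": {
            "type": "TabularTranslator",
            "typeConversion": true
          }
        }
      }
    ]
  }
}
"""


def disable_type_conversion(definition: dict) -> int:
    """Set translator.typeConversion to false on every Copy activity.

    Returns the number of activities that were changed.
    """
    changed = 0
    for activity in definition.get("properties", {}).get("activities", []):
        if activity.get("type") != "Copy":
            continue
        translator = activity.get("typeProperties", {}).get("translator")
        if translator is not None and translator.get("typeConversion") is True:
            translator["typeConversion"] = False
            changed += 1
    return changed


pipeline = json.loads(pipeline_json)
print(disable_type_conversion(pipeline))  # 1
print(json.dumps(pipeline["properties"]["activities"][0]["typeProperties"]["translator"]))
# {"type": "TabularTranslator", "typeConversion": false}
```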

The Mapping panel now offers the Collection Reference field, the advanced editor, and much more.

You can specify the source fields you’re interested in, and the copy activity will only copy those to the destination.

Because the collection reference is set, you can use shorter JSON paths relative to it.

Even Import Schema now captures the structure of my JSON document.

Now you can limit the mapping to the fields in the records section without getting Cartesian product results anymore.
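To illustrate what the collection reference changes, here is a small Python emulation. It assumes the translator takes the ADF `TabularTranslator` shape: a `collectionReference` plus `mappings` whose `source.path` values are relative to each collection item, like `['id']`. The `locations` array, the sink column names, and the `resolve`/`apply_translator` helpers are hypothetical, and the resolver only understands simple `['key']` segments, not full JSONPath.

```python
import re

# Hypothetical two-array document ("locations" is illustrative only).
document = {
    "records": [
        {"id": "1", "company_code": "CC", "company_description": "Character Creator"},
        {"id": "2", "company_code": "MD", "company_description": "Master Decisions"},
    ],
    "locations": [{"city": "Brussels"}, {"city": "Antwerp"}],
}

# Translator shaped like an ADF TabularTranslator with a collection reference;
# the sink column names are assumptions.
translator = {
    "type": "TabularTranslator",
    "collectionReference": "$['records']",
    "mappings": [
        {"source": {"path": "['id']"}, "sink": {"name": "id"}},
        {"source": {"path": "['company_code']"}, "sink": {"name": "company_code"}},
        {"source": {"path": "['company_description']"}, "sink": {"name": "company_description"}},
    ],
}

# Matches simple bracketed keys such as ['records'] or ['id'].
KEY_PATTERN = re.compile(r"\['([^']+)'\]")


def resolve(path: str, node):
    """Follow each ['key'] segment of a simple bracket path."""
    for key in KEY_PATTERN.findall(path):
        node = node[key]
    return node


def apply_translator(doc: dict, spec: dict) -> list[dict]:
    """Emit one row per element of the collection reference."""
    rows = []
    for item in resolve(spec["collectionReference"], doc):
        # Relative mapping paths are resolved against the current item.
        rows.append(
            {m["sink"]["name"]: resolve(m["source"]["path"], item) for m in spec["mappings"]}
        )
    return rows


for row in apply_translator(document, translator):
    print(row)
```

Because rows are generated only per element of `$['records']`, the unrelated array no longer multiplies the output, and each record comes out exactly once.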

The result is now the expected set of records in my Lakehouse.

Conclusion

Manually setting typeConversion to false gets you access to the old Synapse / ADF mapping screen, and from my tests it seems to work identically.

If it feels like a dirty hack, that is probably because it is one. I expect this “feature” may disappear at some point, or become officially available once multiple arrays are properly supported in the Copy Activity in Fabric. Until then, this might just solve your problem.